GitHub - sooftware/speech-transformer: Transformer implementation specialized in speech recognition tasks using PyTorch.

Transformers for Natural Language Processing: Build innovative deep neural network architectures for NLP with Python, PyTorch, TensorFlow, BERT, RoBERTa, and more: Rothman, Denis: 9781800565791: Amazon.com: Books

pytorch - Calculating key and value vector in the Transformer's decoder block - Data Science Stack Exchange

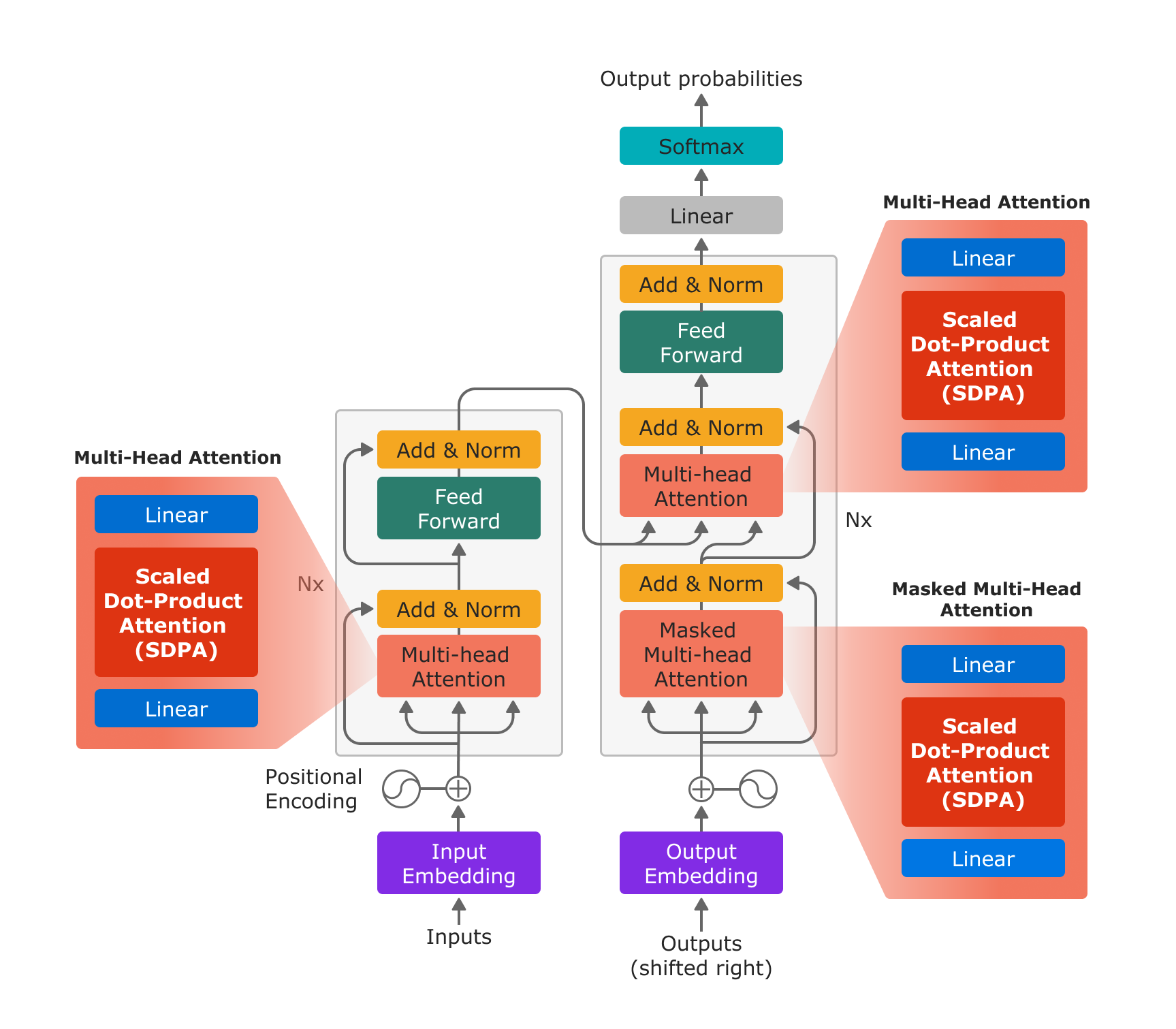

GitHub - gordicaleksa/pytorch-original-transformer: My implementation of the original transformer model (Vaswani et al.). I've additionally included the playground.py file for visualizing otherwise seemingly hard concepts. Currently included IWSLT ...

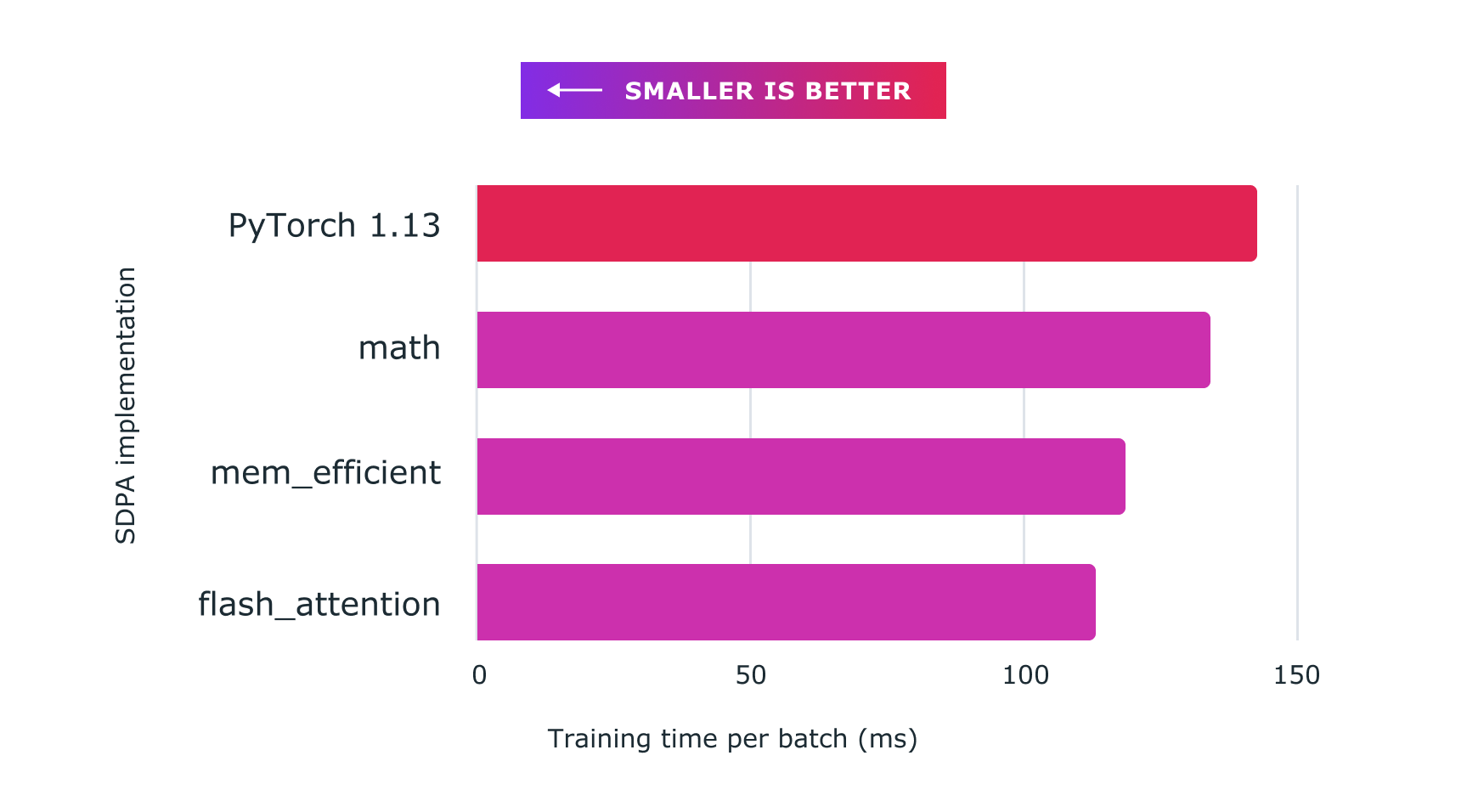

PipeTransformer: Automated Elastic Pipelining for Distributed Training of Large-scale Models | PyTorch